
Ron Fredericks writes: Dr. Saptarshi Guha created RHIPE, the R and Hadoop Integrated Processing Environment, an open-source interface between R and Hadoop. LectureMaker was on the scene to film Saptarshi’s RHIPE presentation to the Bay Area useR Group, introduced by Michael E. Driscoll and hosted at Facebook’s Palo Alto office on March 9, 2010. Special thanks to Jyotsna Paintal for helping me film the event.

Saptarshi received his Ph.D. from Purdue University in 2010, where he was advised by Dr. William S. Cleveland. As of the last update to this blog post, he works at Revolution Analytics in Palo Alto.

Hadoop is an open-source implementation of both the MapReduce programming model and the underlying file system that Google developed to support web-scale data.

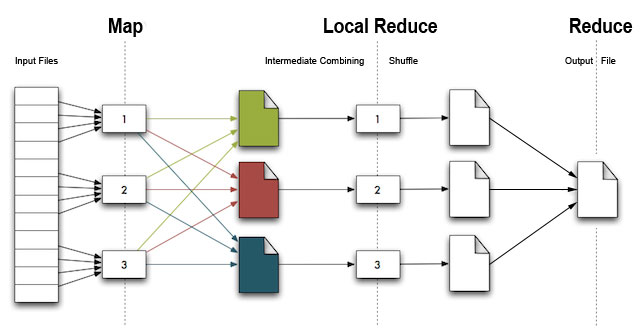

The MapReduce programming model was designed by Google to enable a clean abstraction between large-scale data analysis tasks and the underlying systems challenges involved in ensuring reliable large-scale computation. By adhering to the MapReduce model, your data processing job can be easily parallelized, and you don’t have to think about system-level details such as synchronization, concurrency, and hardware failure.

Reference: “5 common questions about Hadoop” a cloudera blog post May 2009 – by Christophe Bisciglia
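
To make the model concrete, here is a minimal sketch of the map, shuffle, and reduce phases in plain base R, run on a single machine. It is not Hadoop or RHIPE code; the toy records and the names `map_fn`, `pairs`, and `grouped` are made up for illustration.

```r
# Toy records: one line of text per record (made-up data).
records <- c("r hadoop r", "hadoop rhipe", "r")

# Map: emit a (key, value) pair for every word in every record.
map_fn <- function(line) {
  words <- strsplit(line, " +")[[1]]
  lapply(words, function(w) list(key = w, value = 1L))
}
pairs <- unlist(lapply(records, map_fn), recursive = FALSE)

# Shuffle: group the emitted values by key.
keys <- vapply(pairs, function(p) p$key, character(1))
values <- vapply(pairs, function(p) p$value, integer(1))
grouped <- split(values, keys)

# Reduce: collapse each key's values to a single result.
counts <- vapply(grouped, sum, integer(1))
print(counts)
```

On a cluster, the map and reduce calls would run on different machines and the shuffle would move data between them; the programmer still only writes the two functions.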

RHIPE lets the R programmer submit jobs over large datasets to Hadoop, which runs the Map, Combine, Shuffle, and Reduce steps in parallel so that analyses finish quickly. See the figure below for an overview of the video’s key points and use cases.


The RHIPE Video

Note: On May 30, 2013, LectureMaker’s new video player version 4.4 was put into use on this page… “This new version works with LectureMaker’s eCommerce, massive streaming storage, and eLearning plugins. In addition, now you can use the full screen feature, and see automated tool tip displays in the timeline” — Ron Fredericks, Co-founder LectureMaker LLC.

[Video: Saptarshi Guha’s RHIPE presentation (embedded Flash player)]

Video Topics and Navigation Table

- Beginning
- Introduction: Saptarshi Guha
- Introduction: RHIPE
- Analysis of very large data sets
- Overview of Hadoop
- High performance computing with existing R packages
- High performance computing with RHIPE: MapReduce and R
- Case study: VOIP
- Case study, step 1: Convert raw data to R dataset
- Case study, step 2: Feed data to a reduce
- Case study, step 3: Compute summaries
- Case study, step 4: Create new objects
- Case study, step 5: Statistical routines across subsets
- Case study: VOIP summary
- Another example: Dept. of Homeland Security
- RHIPE on EC2: Indiana bio-terrorism project
- Q: What is the discrepancy between sampled data?
- RHIPE on EC2: Simulation timing
- RHIPE Todo list
- RHIPE Lessons learned
- Q: What optimization methods were used?
- Credits

Code Examples from the Video

Source code highlighter note: R and RHIPE language constructs are color coded and hot-linked to appropriate online resources. Click on these links to learn more about these programming features. I manage the R/RHIPE source code highlighter project on my engineering site here: R highlighter.

Move Raw Data Into Hadoop File System for Use In R Data Frames

## Case Study - VoIP

## Copy text data to HDFS

rhput('/home/sguha/pres/voip/text/20040312-105951-0.iprtp.out','/pres/voip/text')

## Use RHIPE to convert text data :

## 1079089238.075950 IP UDP 200 67.17.54.213 6086 67.17.50.213 15074 0

## ...

## to R data frames

input <- expression({

## create components (direction, id.ip,id.port) from Sys.getenv("mapred.input.file")

v <- lapply(seq_along(map.values),function(r) {

value0 <- strsplit(map.values[[r]]," +")[[1]]

key <- paste(value0[id.ip[1]],value0[id.port[1]],value0[id.ip[2]]

,value0[id.port[2]],direction,sep=".")

rhcollect(key,value0[c(1,9)])

})})
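
To see what the map expression above emits for one record, here is a small base R sketch that parses the sample line from the comment without Hadoop. The field positions in `id.ip` and `id.port` and the value of `direction` are my assumptions; in the talk they are derived from the input file name and are not shown.

```r
# Sample record taken from the comment in the listing above.
line <- "1079089238.075950 IP UDP 200 67.17.54.213 6086 67.17.50.213 15074 0"

# ASSUMPTIONS (not given in the talk): which fields hold the IPs and ports,
# and the direction, which the original derives from the input file name.
id.ip <- c(5, 7)
id.port <- c(6, 8)
direction <- "out"

# Split on runs of spaces, as the map expression does.
value0 <- strsplit(line, " +")[[1]]

# Key: source ip.port, destination ip.port, and direction, joined by dots.
key <- paste(value0[id.ip[1]], value0[id.port[1]],
             value0[id.ip[2]], value0[id.port[2]],
             direction, sep = ".")

# Value: the first and ninth fields, as in the listing.
value <- value0[c(1, 9)]

print(key)
print(value)
```

In the RHIPE job, `rhcollect(key, value0[c(1,9)])` would hand this pair to Hadoop instead of printing it.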

Submit a MapReduce Job Then Retrieve semi-calls

## Case Study - VoIP

## We can run this from within R:

mr <- rhmr(map=input,reduce=reduce, inout=c('text','map'), ifolder='/pres/voip/text', ofolder='/pres/voip/df',jobname='create'

,mapred=list(mapred.reduce.tasks=5))

mr <- rhex(mr)

## This takes 40 minutes for 70 gigabytes across 8 computers(72 cores).

## Saved as 277K data frames(semi-calls) across 14 gigabytes.

## We can retrieve semi-calls:

rhgetkey(list('67.17.50.213.5002.67.17.50.6.5896.out'),paths='/pres/voip/df/p*')

## a list of lists(key,value pairs)

Compute Summaries With MapReduce

## Case Study - VoIP

m <- expression({

lapply(seq_along(map.values),function(i){

## make key from map.keys[[i]]

value<-if(tmp[11]=="in")

c(in.start=start,in.end=end,in.dur=dur,in.pkt=n.pkt)

else

c(out.start=start,out.end=end,out.dur=dur,out.pkt=n.pkt)

rhcollect(key,value)

})

})

r<-expression(

pre={

mydata<-list()

ifnull <- function(r,def=NA) if(!is.null(r)) r else def

},reduce={

mydata<-append(mydata,reduce.values)

},post={

mydata<-unlist(mydata)

in.start<-ifnull(mydata['in.start'])

.....

out.end<-ifnull( mydata['out.end'] )

out.start<-ifnull(mydata['out.start'])

value<-c(in.start,in.end,in.dur,in.pkt,out.start,out.end,out.dur,out.pkt)

rhcollect(reduce.key,value)

})
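
The reducer above has three parts: `pre` runs once per key, `reduce` runs each time a batch of values arrives, and `post` runs once at the end. The sketch below mimics that lifecycle locally in base R. The driver `run_reducer` and its stand-in for `rhcollect` are hypothetical helpers written for this post, not RHIPE functions, and the batches are made-up numbers.

```r
# Hypothetical local driver: NOT part of RHIPE, it only mimics the lifecycle.
run_reducer <- function(r, key, batches) {
  out <- list()
  env <- new.env()
  env$reduce.key <- key
  # Stand-in for rhcollect(): store the emitted pair in a local list.
  env$rhcollect <- function(k, v) {
    out[[length(out) + 1L]] <<- list(key = k, value = v)
  }
  eval(r[["pre"]], env)              # runs once, before any values
  for (b in batches) {
    env$reduce.values <- b           # next batch of values for this key
    eval(r[["reduce"]], env)         # may run several times per key
  }
  eval(r[["post"]], env)             # runs once, after the last batch
  out
}

# A cut-down reducer in the same style as the listing above.
r <- expression(
  pre = { mydata <- list() },
  reduce = { mydata <- append(mydata, reduce.values) },
  post = {
    mydata <- unlist(mydata)
    rhcollect(reduce.key, mydata[c("in.pkt", "out.pkt")])
  }
)

# Made-up batches: the "in" and "out" summaries arrive separately.
batches <- list(
  list(c(in.start = 1, in.end = 5, in.dur = 4, in.pkt = 40)),
  list(c(out.start = 2, out.end = 6, out.dur = 4, out.pkt = 38))
)

res <- run_reducer(r, "call-1", batches)
print(res[[1]]$value)
```

Accumulating in `reduce` and emitting only in `post` is what lets the two directions of a call be merged into a single record, regardless of how the values are batched.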

Compute Summaries With MapReduce Across HTTP and SSH Connections

## Example Compute total bytes, total packets across all HTTP and SSH connections.

m <- expression({

w <- sapply(map.values,function(r)

any(r[1,c('sport','dport')] %in% c(22,80)))

lapply(map.values[w],function(v){

key <- if(22 %in% v[1,c('dport','sport')]) 22 else 80

rhcollect(key, c(sum(v[,'datasize']),nrow(v)))

})})

r <- expression(

pre={ sums <- 0 },

reduce={

v <- do.call("rbind",reduce.values)

sums <- sums+apply(v,2,sum)

},post={

rhcollect(reduce.key,c(bytes=sums[1],pkts=sums[2]))

})
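
The same computation can be checked on a laptop before submitting it to a cluster. This is a base R sketch of the logic above on made-up connection data; `conns`, `keep`, `emitted`, and `totals` are illustrative names, and the grouping step stands in for Hadoop's shuffle.

```r
# Made-up data: each element is a data frame of packets for one connection.
conns <- list(
  data.frame(sport = 51000, dport = 80, datasize = c(100, 200, 300)),
  data.frame(sport = 22, dport = 52000, datasize = c(50, 50)),
  data.frame(sport = 53000, dport = 443, datasize = c(999)),
  data.frame(sport = 54000, dport = 80, datasize = c(10))
)

# Map side: keep only HTTP (80) and SSH (22) connections.
keep <- vapply(conns, function(v) {
  any(unlist(v[1, c("sport", "dport")]) %in% c(22, 80))
}, logical(1))

# Emit (port, c(bytes, packets)) for each connection that was kept.
emitted <- lapply(conns[keep], function(v) {
  key <- if (22 %in% unlist(v[1, c("dport", "sport")])) 22 else 80
  list(key = key, value = c(sum(v[, "datasize"]), nrow(v)))
})

# Shuffle: group the emitted pairs by key.
keys <- vapply(emitted, function(e) e$key, numeric(1))
groups <- split(seq_along(emitted), keys)

# Reduce: stack each key's values and add them column-wise.
totals <- lapply(groups, function(idx) {
  vals <- do.call("rbind", lapply(emitted[idx], function(e) e$value))
  s <- colSums(vals)
  c(bytes = s[[1]], pkts = s[[2]])
})
print(totals)
```

The HTTPS connection on port 443 is filtered out in the map step, so only the keys 22 and 80 reach the reducer.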

Load RHIPE on an EC2 Cloud

library(Rhipe)

load("ccsim.Rdata")

rhput("/root/ccsim.Rdata","/tmp/")

setup <- expression({

load("ccsim.Rdata")

suppressMessages(library(survstl))

suppressMessages(library(stl2))

})

chunk <- floor(length(simlist)/ 141)

z <- rhlapply(a,cc_sim, setup=setup,N=chunk,shared="/tmp/ccsim.Rdata"

,aggr=function(x) do.call("rbind",x),doLoc=TRUE)

rhex(z)
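
The idea behind the listing above is to apply a function to a long list in chunks and then bind the results together. Here is a sequential base R sketch of that pattern. The stand-ins for `simlist` and `cc_sim`, and the chunk size of 3, are made up; how `rhlapply` actually interprets `N` is not something this sketch claims to reproduce.

```r
# Made-up stand-ins for simlist and cc_sim from the listing above.
simlist <- as.list(1:10)
cc_sim <- function(s) data.frame(id = s, result = s^2)

# Tasks per chunk (assumed value, chosen only for the example).
chunk <- 3
groups <- split(seq_along(simlist), ceiling(seq_along(simlist) / chunk))

# Each chunk could run on a different worker; here they run one after another.
per_chunk <- lapply(groups, function(idx) lapply(simlist[idx], cc_sim))

# Aggregate, mirroring aggr = function(x) do.call("rbind", x).
aggr <- function(x) do.call("rbind", x)
z <- aggr(unname(unlist(per_chunk, recursive = FALSE)))
print(dim(z))
```

Because every task returns a data frame with the same columns, `rbind` is enough to combine the pieces into one result.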

References:

“I just watched the Saptarshi Guha video. It looks great!! Thank you! The picture is incredibly crisp, and the timeline tab is a nice touch for reviewing the film. Thank you!” — Matt Bascom

VMware’s open-source partnership with Cloudera offers you a virtual machine with Hadoop, PIG, and HIVE – download

Purdue University hosts the documentation and open-source code base for RHIPE – download
